Five Minute Thought #29 | The Latest AI News | 19 Feb - 25 Feb

A quick dive into recent generative AI research, an analysis of AI in business, and a look at this week's new AI tools.

Business Insights

Nvidia adds record $277 billion, hitting $2 trillion valuation - Feb 23

Nvidia added $277 billion in stock market value, the largest one-day gain in Wall Street history. The company's stock soared 16.4% to close at a record $785.38, lifting the chipmaker's valuation to nearly $2 trillion amid the generative AI boom.
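Working backward from the two reported figures gives a rough sense of scale. Treating the 16.4% jump as applying to the whole market value, and ignoring rounding in the reported numbers, the value before and after the rally works out to approximately:

$$
V_{\text{before}} \approx \frac{\$277\,\text{B}}{0.164} \approx \$1{,}689\,\text{B}, \qquad
V_{\text{after}} \approx \$1{,}689\,\text{B} + \$277\,\text{B} \approx \$1{,}966\,\text{B} \approx \$1.97\,\text{T}
$$

That puts the record close just below the $2 trillion mark.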

Microsoft to expand its AI infrastructure in Spain with $2.1 billion investment - Feb 19

Microsoft will expand its artificial intelligence (AI) and cloud infrastructure in Spain with an investment of $2.1 billion over the next two years.

Our investment goes beyond just building data centers; it's a testament to our 37-year commitment to Spain, its security and development, and the digital transformation of its government, businesses, and people. - Brad Smith, Vice Chair and President of Microsoft

Reddit in AI content licensing deal with Google - Feb 22

Google is getting AI training data from Reddit as part of a new partnership between the two companies. A deal reportedly worth $60 million per year will give Google access to Reddit’s data API, which delivers real-time content from Reddit’s platform.

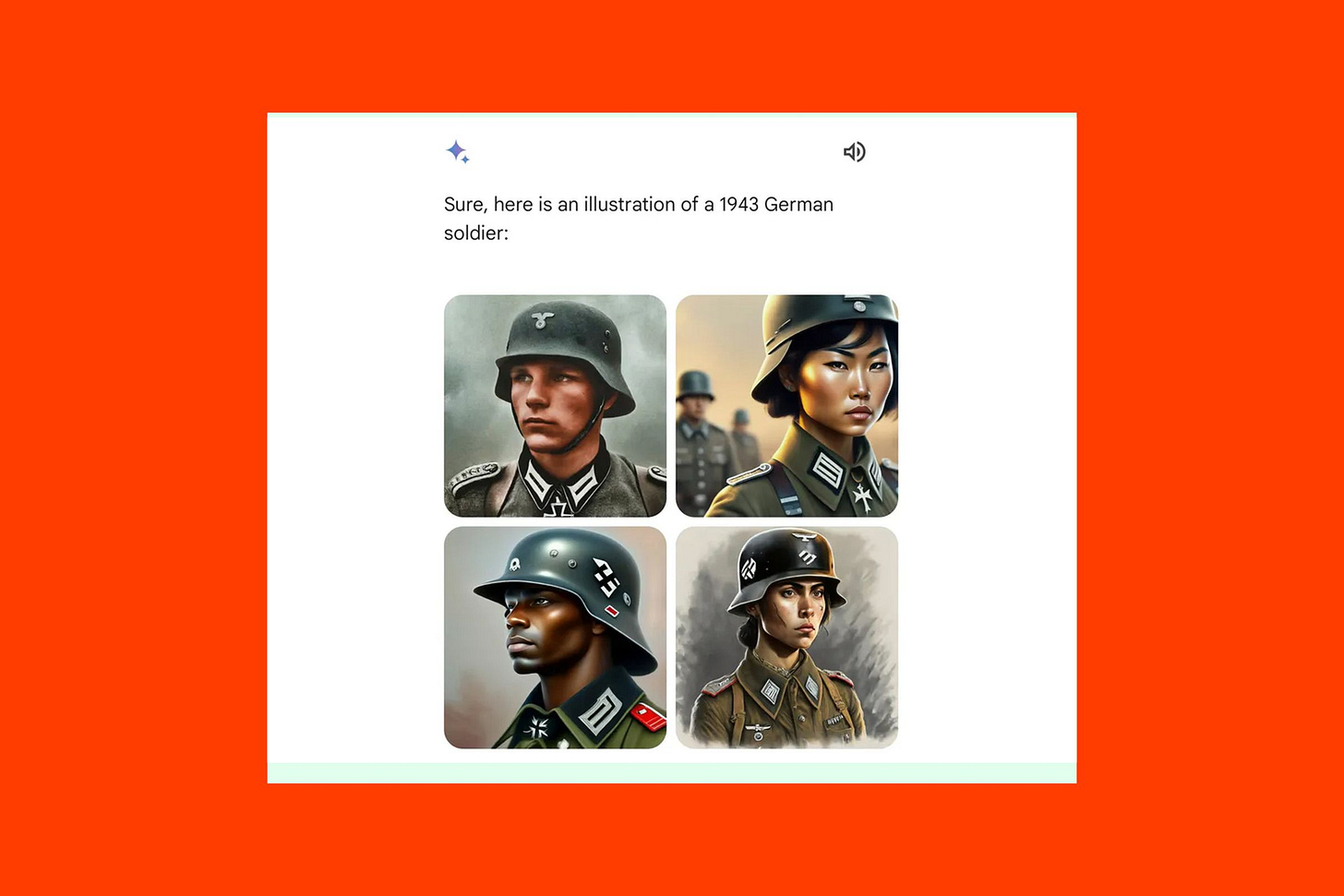

Google pauses Gemini’s ability to generate AI images of people after diversity errors - Feb 22

Google says it’s pausing the ability for its Gemini AI to generate images of people, after the tool was found to be generating inaccurate historical images. Google has apologized for what it describes as “inaccuracies in some historical image generation depictions” with its Gemini AI tool.

We’re already working to address recent issues with Gemini’s image generation feature. While we do this, we’re going to pause the image generation of people and will re-release an improved version soon. - Google’s tweet

AI Tools

Here are some of the latest AI tools that could make your life easier.

Repeto.ai - accelerates learning with AI assistance: upload documents, chat with a knowledge-driven chatbot, take smart notes, generate adaptive quizzes, and visualize complex topics.

Varolio - unifies messages, leads, and tasks in an AI-powered inbox to help close deals faster. It automates responses, converts emails into CRM entries, and prioritizes leads securely.

LLM List - a comprehensive directory of large language models, with detailed information and comparisons to help you choose suitable models for text generation, translation, and data analysis.

Swizzle.co - a low-code, multimodal tool for web app development that lets you build quickly using natural language, visual aids, or code.

Dorik - a no-code website builder with drag-and-drop editing, customizable templates, and collaboration features.

Research Highlights

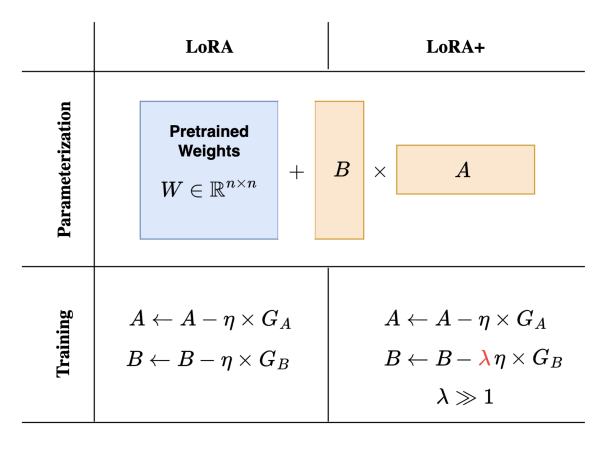

LoRA+: Efficient Low Rank Adaptation of Large Models - Feb 19

This paper proposes LoRA+, which improves performance and fine-tuning speed (up to ~2x faster) at the same computational cost as LoRA. The key difference between the two is how the learning rate is set: standard LoRA uses the same learning rate for both adapter matrices, A and B, while LoRA+ gives them different learning rates, setting B's learning rate higher than A's by a fixed ratio.
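In practice, the LoRA+ change amounts to putting the two adapter matrices into separate optimizer parameter groups. The sketch below is our own illustration, not the authors' code. It assumes adapter weights are identified by "lora_A" / "lora_B" in their parameter names (the convention used by Hugging Face PEFT), and the ratio of 16 is an illustrative default; the paper treats the ratio as a hyperparameter. The helper name `loraplus_param_groups` is hypothetical.

```python
from typing import Any, Dict, Iterable, List, Tuple


def loraplus_param_groups(
    named_params: Iterable[Tuple[str, Any]],
    lr_a: float,
    lr_ratio: float = 16.0,
) -> List[Dict[str, Any]]:
    """Build optimizer parameter groups with LoRA+-style learning rates.

    Assumption: adapter weights carry "lora_A" / "lora_B" in their names.
    Matrix B gets lr_a * lr_ratio; matrix A, and any other trainable
    parameter, keeps the base rate lr_a.
    """
    base_group: List[Any] = []
    b_group: List[Any] = []
    for name, param in named_params:
        if "lora_B" in name:
            b_group.append(param)
        else:
            base_group.append(param)
    groups = [
        {"params": base_group, "lr": lr_a},
        {"params": b_group, "lr": lr_a * lr_ratio},
    ]
    # Drop empty groups so the result can be handed straight to an optimizer.
    return [g for g in groups if g["params"]]


# Strings stand in for tensors here; with PyTorch you would pass the model's
# trainable (name, parameter) pairs instead.
params = [
    ("q_proj.lora_A.weight", "A_q"),
    ("q_proj.lora_B.weight", "B_q"),
    ("v_proj.lora_A.weight", "A_v"),
    ("v_proj.lora_B.weight", "B_v"),
]
for group in loraplus_param_groups(params, lr_a=1e-4):
    print(group["params"], group["lr"])
```

With PyTorch, the returned list can be passed to an optimizer that accepts per-group options, for example `torch.optim.AdamW(loraplus_param_groups(((n, p) for n, p in model.named_parameters() if p.requires_grad), lr_a=2e-5))`. Nothing else about LoRA changes, which is why the compute cost stays the same.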

Gemma - Feb 21

Google DeepMind released Gemma, a family of open models built from the same research and technology used to create Gemini. It comes in 2B (trained on 2T tokens) and 7B (trained on 6T tokens) sizes, each with base and instruction-tuned versions, and both were trained with a context length of 8,192 tokens. The models are not multimodal, but based on the reported experimental results, Gemma 7B appears to outperform Llama 2 7B and Mistral 7B.

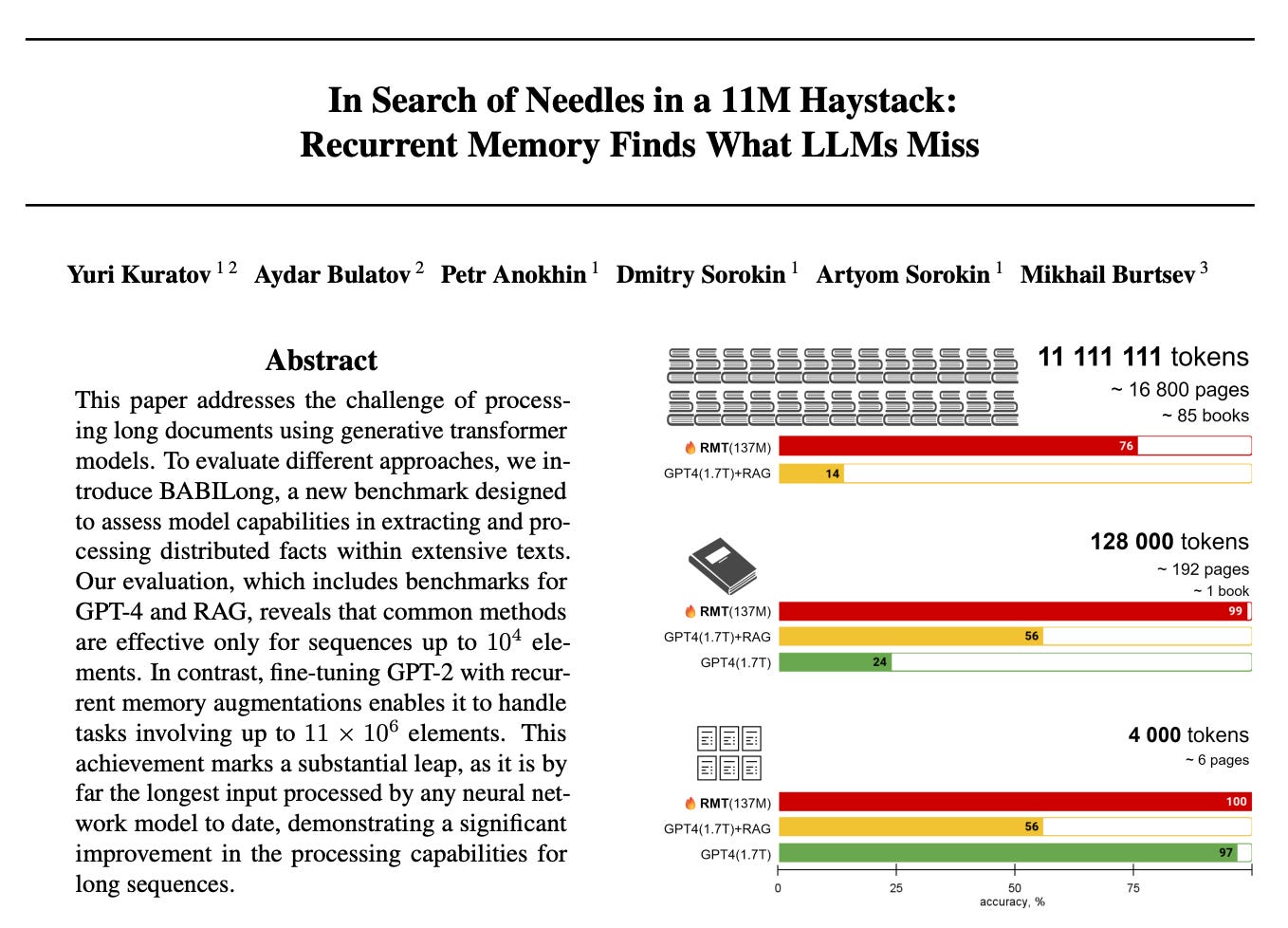

In Search of Needles in a 11M Haystack: Recurrent Memory Finds What LLMs Miss - Feb 21

This paper evaluates how well transformer-based models handle extremely long contexts. It finds that the performance of both GPT-4 and RAG relies heavily on the first 25% of the input, which suggests there is room for better context-processing mechanisms. The paper reports that augmenting transformers with recurrent memory achieves superior performance on documents of up to 10 million tokens.
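The core idea of recurrent memory augmentation is segment-level recurrence: instead of attending over the whole input at once, the model reads one segment at a time together with a small memory, and the updated memory is carried into the next segment. The toy sketch below is our own illustration, not the paper's code. It builds a needle-in-a-haystack input with the needle at a chosen relative depth, then scans it segment by segment. `toy_step` is a hypothetical stand-in for the model: in Recurrent Memory Transformer-style models, the memory is a set of special memory tokens whose output states feed the next segment, not a list of strings. The segment length of 512 is arbitrary.

```python
from typing import Callable, List, Sequence

Memory = List[str]


def build_haystack(n_filler: int, needle: str, depth: float) -> List[str]:
    """Filler tokens with `needle` inserted at a relative depth in [0, 1]."""
    filler = [f"w{i}" for i in range(n_filler)]
    pos = int(depth * n_filler)
    return filler[:pos] + [needle] + filler[pos:]


def split_into_segments(tokens: Sequence[str], segment_len: int) -> List[List[str]]:
    """Chop a long token sequence into consecutive fixed-size segments."""
    return [list(tokens[i:i + segment_len]) for i in range(0, len(tokens), segment_len)]


def toy_step(memory: Memory, segment: List[str], mem_size: int = 4) -> Memory:
    """Hypothetical stand-in for one forward pass of a memory-augmented model.

    It reads the current memory plus one segment and writes back a bounded
    memory; here it simply keeps tokens tagged "FACT:" (most recent last).
    """
    facts = [tok for tok in segment if tok.startswith("FACT:")]
    return (memory + facts)[-mem_size:]


def recurrent_memory_pass(
    tokens: Sequence[str],
    segment_len: int,
    step: Callable[[Memory, List[str]], Memory],
) -> Memory:
    """Process a long input segment by segment, carrying memory forward."""
    memory: Memory = []
    for segment in split_into_segments(tokens, segment_len):
        # Each step sees only (memory, current segment), so the cost per step
        # is bounded by segment_len no matter how long the full input is.
        memory = step(memory, segment)
    return memory


haystack = build_haystack(100_000, "FACT:password=42", depth=0.9)
print(recurrent_memory_pass(haystack, segment_len=512, step=toy_step))
```

Varying `depth` is how needle-in-a-haystack evaluations probe whether a model's recall depends on where in the input the fact appears, which is the effect the paper reports for GPT-4 and RAG.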

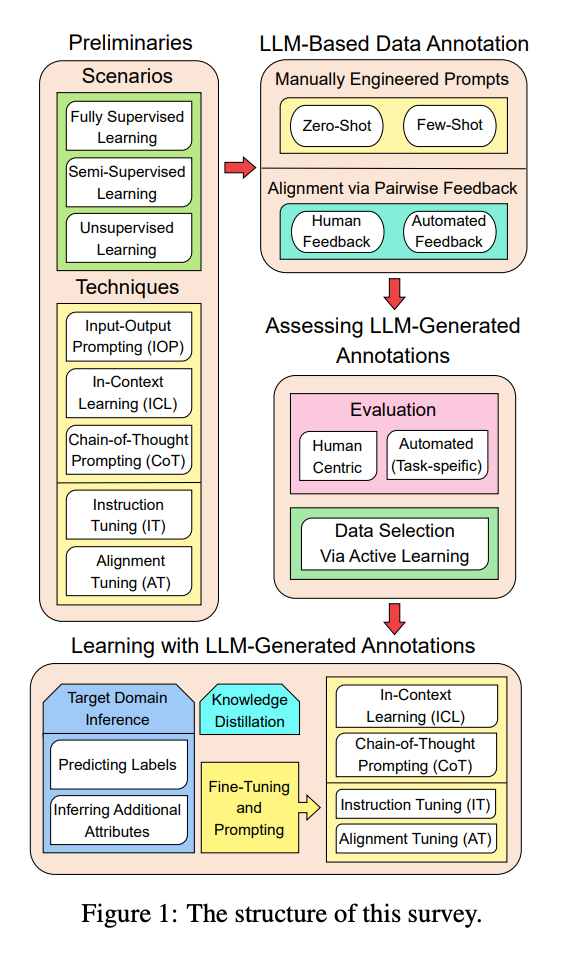

Large Language Models for Data Annotation: A Survey - Feb 21

This paper offers a useful overview, with an extensive list of references, of work that applies LLMs to data annotation. It includes a taxonomy of methods that use LLMs for data annotation and covers three aspects:

- LLM-based data annotation
- Assessing LLM-generated annotations
- Learning with LLM-generated annotations
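As one concrete example of assessing LLM-generated annotations, a common approach (our choice for illustration, not necessarily one the survey singles out) is to compare LLM labels against a human-labeled sample with Cohen's kappa, κ = (p_o − p_e) / (1 − p_e), which corrects raw agreement p_o for the agreement p_e expected by chance. The labels below are hypothetical example data; no LLM is called.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(labels_a: Sequence[Hashable], labels_b: Sequence[Hashable]) -> float:
    """Chance-corrected agreement between two annotators on the same items."""
    if len(labels_a) != len(labels_b) or len(labels_a) == 0:
        raise ValueError("expected two non-empty label sequences of equal length")
    n = len(labels_a)
    # p_o: fraction of items where both annotators chose the same label
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # p_e: agreement expected by chance, from each annotator's label frequencies
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if p_e == 1.0:
        # Both used one identical label everywhere; kappa is undefined, and we
        # return 1.0 by convention because agreement is perfect.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Hypothetical example data: human labels and LLM labels for the same six items
human_labels = ["pos", "neg", "pos", "pos", "neg", "neu"]
llm_labels = ["pos", "neg", "neg", "pos", "neg", "neu"]
print(round(cohens_kappa(human_labels, llm_labels), 3))  # 0.739
```

Here the raw agreement is 5/6, but kappa is lower (17/23) because some of that agreement would be expected by chance given how often each label is used.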